How AI is Revolutionizing Live Production

The role of artificial intelligence (AI) in live production has grown substantially over the past few years. Tasks that once required entire teams of editors, subtitlers, data analysts, and graphics operators are now often handled by machines in real time. From synthetic anchors and emotion tagging to automated highlights, real-time translation, and targeted ads, production is changing across broadcasting, streaming, and sports. AI has evolved from a predominantly post-production tool into the central system that manages live content from creation to distribution. This revolution is altering how news is covered, how sports are broadcast, and how international audiences are reached.

The global market for AI in media and entertainment is forecast to exceed $99 billion by 2030, growing at a compound annual growth rate (CAGR) of more than 25%. Most of that growth is expected to come from live content, where scalability, speed, and personalization are critical. Broadcasters, streamers, and sports organizations are the early adopters, aiming to cut costs while delivering more immersive experiences to viewers.

The most noticeable advancement has been the rise of synthetic hosts. In 2018, China’s state-run Xinhua News Agency unveiled what it billed as the world’s first AI news anchor, modeled on real journalists and able to deliver news around the clock in both Chinese and English. South Korea’s MBN followed in 2020 with an AI version of its anchor Kim Ju-ha. These avatars replicate a human’s voice and appearance and are increasingly connected to emotion-tagging engines.

Tools such as Affectiva (acquired by Smart Eye in 2021) and the emotion-recognition feature of Microsoft’s Azure Face API, which Microsoft has since retired, have been used to gauge audience sentiment in real time. They infer viewers’ reactions from webcam footage or phone-based engagement metrics, and a synthetic anchor can use those signals to adjust its tone, expression, and pacing to hold viewers’ attention. Some studies suggest that emotion-aware content can increase viewer retention by as much as 17%, giving broadcasters a crucial edge in a crowded streaming market.

Synthetic anchors are important to broadcasters because they make content localization, translation, and distribution possible without the staffing costs of employing live anchors across multiple time zones. Some Chinese regional stations use synthetic co-hosts to present weather forecasts and financial news bulletins, and European broadcasters are trialing them in niche late-night programming.

Breaking down language barriers is one of the most important applications. YouTube Live and professional broadcast software now use AI for real-time closed captioning and translation, and vendors, including the big tech firms, offer near-instant captioning across many languages. Over the past two years, English-to-Spanish and English-to-French translation has reached more than 90% accuracy with latency of less than three seconds.

The 2024 Paris Olympics were a landmark. Olympic Broadcasting Services (OBS) used AI-based captioning on live streams, automatically generating subtitles in 16 languages for online platforms. With more than 3 billion online views, AI-generated subtitles were instrumental in reaching multilingual audiences.

RTVE, Spain’s public broadcaster, tested AI-assisted subtitling on LaLiga streams, making domestic football coverage more accessible to audiences across Latin America. KUDO and Interprefy, start-ups originally built for multilingual conferencing, are quickly moving into live broadcasting. Their systems support simultaneous voice translation for esports finals, global concerts, and corporate livestreams, dramatically expanding audience reach without a proportional increase in cost.

According to MarketsandMarkets, the market for AI-powered live translation in media is expected to reach $1.5 billion by 2027, driven by broadcasters increasingly choosing automated subtitling over human captioning staff.

Sports broadcasting is an ideal testing ground for AI-generated graphics. Companies such as Stats Perform and WSC Sports use machine learning to analyze live data and generate on-screen graphics.

Prime Video’s Thursday Night Football introduced “Prime Vision with Next Gen Stats,” an alternate stream with AI-driven overlays that track player movements, display predicted win probabilities, and suggest plays. According to internal Amazon data, these features coincided with a 12% increase in average watch time among younger viewers.

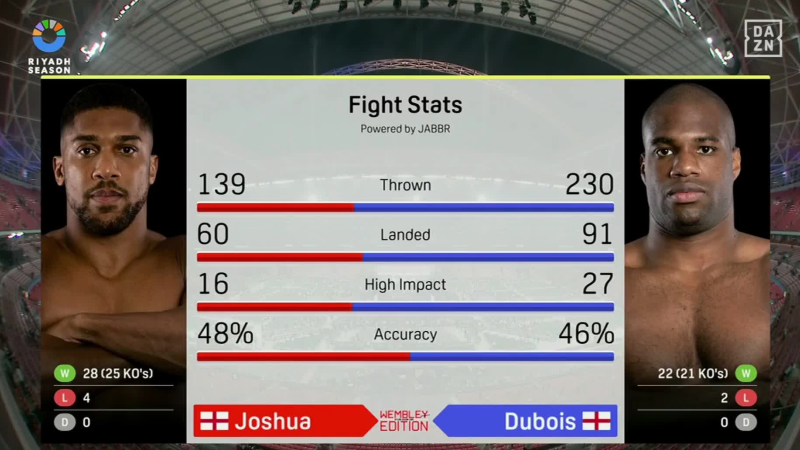

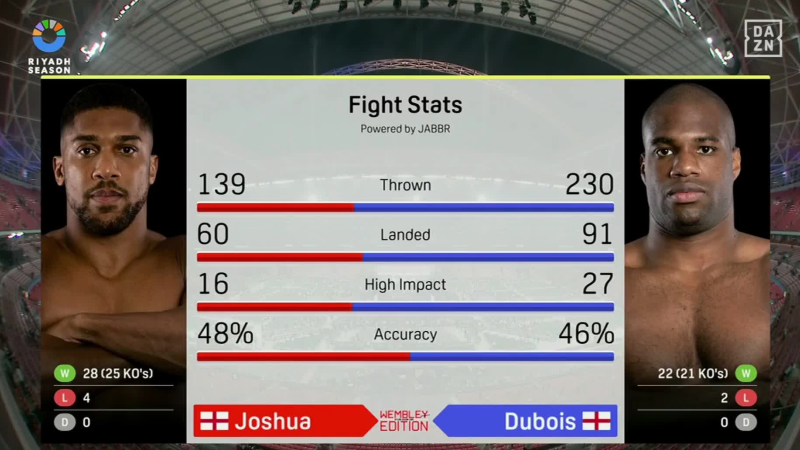

DAZN has begun using AI-based graphics in its boxing and football coverage in Europe, automatically generating real-time punch statistics, heat maps, and provisional scorecards. Financial broadcasters such as Bloomberg and CNBC use similar systems: AI collects market data and builds charts while segments are on air, sometimes faster than human producers can react.

The efficiency gains are substantial. A 2023 Deloitte report found that AI-based graphics systems cut production staffing costs for live sports broadcasters by up to 30% and speed up turnaround by more than 50%.

Creating highlight reels used to take hours of post-production work; today, AI can produce them in seconds. Twitch began experimenting with AI highlight clipping in 2023, training models to detect surges in chat activity, streamers’ facial reactions, and gameplay statistics. According to Twitch, streamers who used automated clipping saw a 22% increase in clip views, and their clips spread to social media faster.
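Twitch has not published how its clipping models work, so the following is only a minimal sketch of one of the signals mentioned above, a surge in chat activity, using a simple rolling z-score over per-second message counts. The function names, window sizes, and threshold are hypothetical choices for illustration; a real system would combine this with facial-reaction and gameplay signals rather than rely on chat volume alone.

```python
from statistics import mean, pstdev


def detect_chat_surges(counts, baseline_window=30, z_threshold=3.0):
    """Return indices of time buckets whose chat volume spikes above a rolling baseline.

    counts: number of chat messages per time bucket (for example, per second).
    Window size and threshold are illustrative, hypothetical defaults.
    """
    surges = []
    for i in range(baseline_window, len(counts)):
        baseline = counts[i - baseline_window:i]
        mu = mean(baseline)
        # A perfectly flat baseline has zero spread; fall back to 1.0 to avoid dividing by zero.
        sigma = pstdev(baseline) or 1.0
        if (counts[i] - mu) / sigma >= z_threshold:
            surges.append(i)
    return surges


def clip_windows(surge_indices, pre=10, post=5):
    """Turn surge indices into (start, end) clip windows, merging windows that overlap."""
    windows = []
    for i in surge_indices:
        start, end = max(0, i - pre), i + post
        if windows and start <= windows[-1][1]:
            # Overlaps the previous clip: extend it instead of starting a new one.
            windows[-1] = (windows[-1][0], max(windows[-1][1], end))
        else:
            windows.append((start, end))
    return windows


if __name__ == "__main__":
    counts = [4, 6] * 20   # steady chat: alternating 4 and 6 messages per bucket
    counts[32] = 40        # a sudden burst, e.g. right after a big play
    print(clip_windows(detect_chat_surges(counts)))  # → [(22, 37)]
```

The `pre` padding matters because the chat reaction lags the on-screen moment: the clip starts ten buckets before the spike so the play that caused it is included.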

Professional sports leagues have adopted similar technology. LaLiga Tech, the innovation unit of Spain’s LaLiga, has built AI highlight engines tailored to individual users’ preferences. A Real Madrid supporter in Mexico might receive a 90-second reel of all their team’s goals, while a fantasy football player in the UK might get highlights built around player statistics.
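LaLiga Tech has not published how its engine works. The sketch below only illustrates the general idea of a personalized reel under stated assumptions: each clip already carries descriptive tags (team, event type), relevance is simply the number of tags shared with the user’s interests, and clips are added greedily until a 90-second budget is filled. The `Clip` type, tag names, and scoring rule are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Clip:
    clip_id: str
    duration_s: int
    tags: frozenset  # hypothetical tags, e.g. frozenset({"real_madrid", "goal"})


def build_reel(clips, user_tags, budget_s=90):
    """Greedily fill a reel of at most budget_s seconds with the best-matching clips."""

    def score(clip):
        # Relevance = number of tags the clip shares with the user's interests.
        return len(clip.tags & user_tags)

    # Highest relevance first; among equals, prefer shorter clips so more fit.
    ranked = sorted((c for c in clips if score(c) > 0),
                    key=lambda c: (-score(c), c.duration_s))
    reel, used = [], 0
    for clip in ranked:
        if used + clip.duration_s <= budget_s:
            reel.append(clip.clip_id)
            used += clip.duration_s
    return reel


if __name__ == "__main__":
    clips = [
        Clip("rm_goal_1", 30, frozenset({"real_madrid", "goal"})),
        Clip("rm_goal_2", 35, frozenset({"real_madrid", "goal"})),
        Clip("barca_goal", 30, frozenset({"barcelona", "goal"})),
        Clip("rm_save", 40, frozenset({"real_madrid", "save"})),
    ]
    print(build_reel(clips, {"real_madrid", "goal"}))  # → ['rm_goal_1', 'rm_goal_2']
```

Greedy selection is a simple heuristic rather than an optimal solution: picking clips to maximize relevance under a time budget is a knapsack problem, and a production engine would more likely rank clips with a learned model.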

WSC Sports, a pioneer in this field, serves more than 40 sports organizations worldwide, including the NBA, the Bundesliga, and ESPN. The company raised $100 million in 2022, a sign of investor interest in automated video creation, and its systems generate more than 13 million customized sports highlights each month.

The transformation is particularly evident in live streaming, where AI turns a passive broadcast into an interactive, personalized experience. With 5G cutting latency to below 20 milliseconds in parts of Europe, individual streams can be delivered almost in real time. BT Sport (now TNT Sports) in the UK and Movistar+ in Spain are testing AI systems that let viewers switch between alternative feeds: tactical overhead views, player POV cameras, or even drone shots.

Formula 1 has also led the way. Its F1 TV Pro app lets fans watch entire races from an individual driver’s onboard camera, with AI picking out the most exciting moments. Machine learning algorithms analyze overtaking opportunities, pit strategies, and fan engagement to decide which clips to show.

Amazon’s Thursday Night Football coverage also includes “X-Ray” features, in which AI predicts the plays most likely to happen next and suggests alternative replay angles. Early trials showed higher engagement among viewers under 35, a demographic that traditional television has struggled to retain.

According to a PwC survey, more than 40% of live sports content is expected to be personalized for individual viewers by 2028, up from less than 10% today.

Monetization is also evolving with AI. Dynamic ad insertion can swap ads in real time based on a viewer’s location and demographics, and even on on-screen events during a live stream. Large advertisers are beginning to invest heavily in AI-driven live ad placements through platforms and services such as Google Ad Manager, The Trade Desk, and Amazon’s ad-supported Freevee.
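The event-driven part of dynamic ad insertion can be sketched as a lookup from detected on-screen events to eligible creatives. This is a minimal illustration under stated assumptions: the `Ad` type, event names, region codes, and the 120-second minimum gap between breaks are hypothetical, and the events are assumed to arrive from an upstream AI detector. Real deployments typically signal ad opportunities in the stream with SCTE-35 cue messages and request creatives from an ad decision server; none of that plumbing is modeled here.

```python
from dataclasses import dataclass


@dataclass
class Ad:
    ad_id: str
    triggers: frozenset  # on-screen events this creative should follow, e.g. {"goal"}
    regions: frozenset   # regions where the creative is allowed to run


def pick_ad(event, viewer_region, inventory):
    """Return the first creative matching both the event and the viewer's region, or None."""
    for ad in inventory:
        if event in ad.triggers and viewer_region in ad.regions:
            return ad.ad_id
    return None


def schedule_contextual_ads(events, viewer_region, inventory, min_gap_s=120):
    """Map timestamped match events to ad insertions, keeping breaks min_gap_s apart.

    events: list of (timestamp_s, event_name) tuples in chronological order.
    """
    insertions, last_break = [], None
    for ts, event in events:
        if last_break is not None and ts - last_break < min_gap_s:
            continue  # too soon after the previous break
        ad_id = pick_ad(event, viewer_region, inventory)
        if ad_id is not None:
            insertions.append((ts, ad_id))
            last_break = ts
    return insertions


if __name__ == "__main__":
    inventory = [
        Ad("boots_goal_es", frozenset({"goal"}), frozenset({"ES"})),
        Ad("boots_goal_mx", frozenset({"goal"}), frozenset({"MX"})),
        Ad("insurance_red_card", frozenset({"red_card"}), frozenset({"ES", "MX"})),
    ]
    events = [(600, "goal"), (650, "red_card"), (1500, "red_card"), (2000, "corner")]
    print(schedule_contextual_ads(events, "ES", inventory))
    # → [(600, 'boots_goal_es'), (1500, 'insurance_red_card')]
```

The same event feed produces different breaks for different viewers: a viewer in `"MX"` would get `boots_goal_mx` after the goal instead, which is the per-viewer targeting the paragraph describes.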

European sports betting companies have been quick to adopt this technology. During Euro 2024, some digital feeds used AI engines to detect goals or red cards and automatically surface related betting offers. Early figures from ad-tech companies suggest that AI-powered contextual ads raise click-through rates by 15% to 20% compared with static ads.

Meanwhile, streaming platforms are using AI to test hundreds of ad variations in real time. Netflix, which launched its ad-supported tier in 2022, is exploring predictive ad targeting that responds to audience emotions during playback.

AI is no longer just an invisible tool; it is now part of the live content creation process itself. It translates, generates graphics, edits highlights, personalizes streams, and optimizes monetization, all in real time.

For broadcasters, the benefits are many: lower operating costs, scalable content creation, and greater international reach. For audiences, the experience is more personal, with real-time subtitle options, highlights tailored to their interests, multiple camera angles, and overlays that heighten the live experience.

As infrastructure such as 5G and edge computing advances, the line between production and consumption will blur even further. Rather than simply responding to what viewers want, AI will anticipate their preferences before they even click. Live broadcasting is no longer controlled by humans alone; it is increasingly steered by AI, which is changing not only how content is produced but also how it is experienced.

Using AI, however, is not about replacing human creativity; it is about enhancing it. AI frees producers, directors, and creators from routine technical work so they can focus on storytelling, scripting, and high-level creative decisions. The AI anchor reads the script, but a human reporter writes it. AI cuts the clips, but a person finds the heart of the story. This collaboration between human judgment and AI is creating a more vibrant, accessible, and personalized media environment, so that wherever you are and whatever you’re watching, your live experience is designed for you.
